This is a story,

all about why,

I made my mother a picture frame,

powered by AI.

Buying Christmas gifts is hard. They have to be unique, and meaningful, and different from the gift that you bought last year - but also better. Gah! It's a nightmare - it's a treadmill. No wonder we all drink so much at Christmas.

I'd have thought that gifts for family members would have become easier to buy as the years passed. These are the people that you've spent the majority of your life with - so you'd know exactly what they'd want / need, right?

In my experience, my family want less and less and that makes it harder and harder to pick out a gift that I think shows them how much I love them.

My mother is the worst of all. It's gotten to the point where for the last 5 years the conversation around gifts for her has gone something like this:

Me, 1 month before Christmas

Me: "Mum, is there anything you'd like for Christmas? Name it."

Mum: "Oooh, I'll have a think about it... let me get back to you."

Me, 2 weeks before Christmas

Me: "Mum, did you ever think about what you might like for Christmas? It's getting a little close now..."

Mum: "I don't really know really... let me think about it a little longer"

Me, on Christmas Eve:

[ Running around Boots throwing everything in a trolley ]

I am certain that the stress from this process has shortened my life in the order of years.

So, what am I to do? My mum is never going to tell me that she wants anything, I have no idea what to buy her myself (an Amazon parcel just feels so impersonal), and Christmas isn't going to be cancelled anytime soon so I have to keep buying things.

I know! I'm a Maker - I'll make her something.

Pictured: Me, when I come up with a fun project to make

Now, I've had this thought before, and I've always managed to dissuade myself by remembering a clip from Malcolm in the Middle. In it, Reese makes his mother Lois a gift for her birthday...

It's a potato with a nickel stuck in the top of it with "I love you mom" written on the side in marker pen.

Though I'd like to think that I'd be able to come up with something better than a nickel stuck in a potato, I've always worried that I'd end up making a metaphorical potato instead. If I'm going to make something, it has to be something that my mum is going to love.

Like every grandmother, my mum dotes on her grandchildren (the daughter and son of my sister, Grace and Freddie), but they don't live nearby, so she doesn't get to see them as much as she might like.

Now, we live in the social media age - even if we're not always able to be present in each other's lives, we can still keep track of each other and feel like we're a part of things by looking at social media posts. My sister is a prolific poster to Instagram, and every photo she puts up is of my niblings (yep, that's the right term. Google it.) growing up and living their lives: enjoying the snow, going to school, losing their baby teeth. We can experience all of these precious moments vicariously through social media.

My mum has no interest in joining social networks (she finds them overly complex) but she still wants to see these snapshots of life as they happen. I can be certain that anytime I go home the question that follows "How are you?" will be "Are there any new photos of Grace and Freddie?". I don't mind giving my mum my phone to flick through photos of her grandkids, but we all know the anxiety of watching someone else flick around on your phone.

So I thought "I know! Why don't I make my mum a picture frame that filters my Instagram feed to show only pictures of my niece and nephew!".

So that's exactly what I did.

All the bits and bobs

There are quite a few parts to put together to make this sort of thing work, so here's a list of the parts that I used, followed by an explanation of what each of them did and why.

- A Watson Visual Recognition model that can recognise my niece and nephew.

- A Chrome extension for parsing my Instagram feed

- Several serverless functions for processing the images:

  - A function for receiving a list of the possible images to analyse and checking whether we've looked at each one before (the "checker" function)

  - A function for retrieving and storing each newly found image (the "getter" function)

  - A function for detecting and extracting faces from each image (the "face-extraction" function)

  - A function that analyses each face to figure out if any of them are either my niece or nephew, and then stores the original photo for display if they're found (the "classifier" function)

  - A function for listing and retrieving the images in storage that contain niece and/or nephew (the "list-and-retrieve" function).

- An Electron app which calls the final serverless function and displays the images.

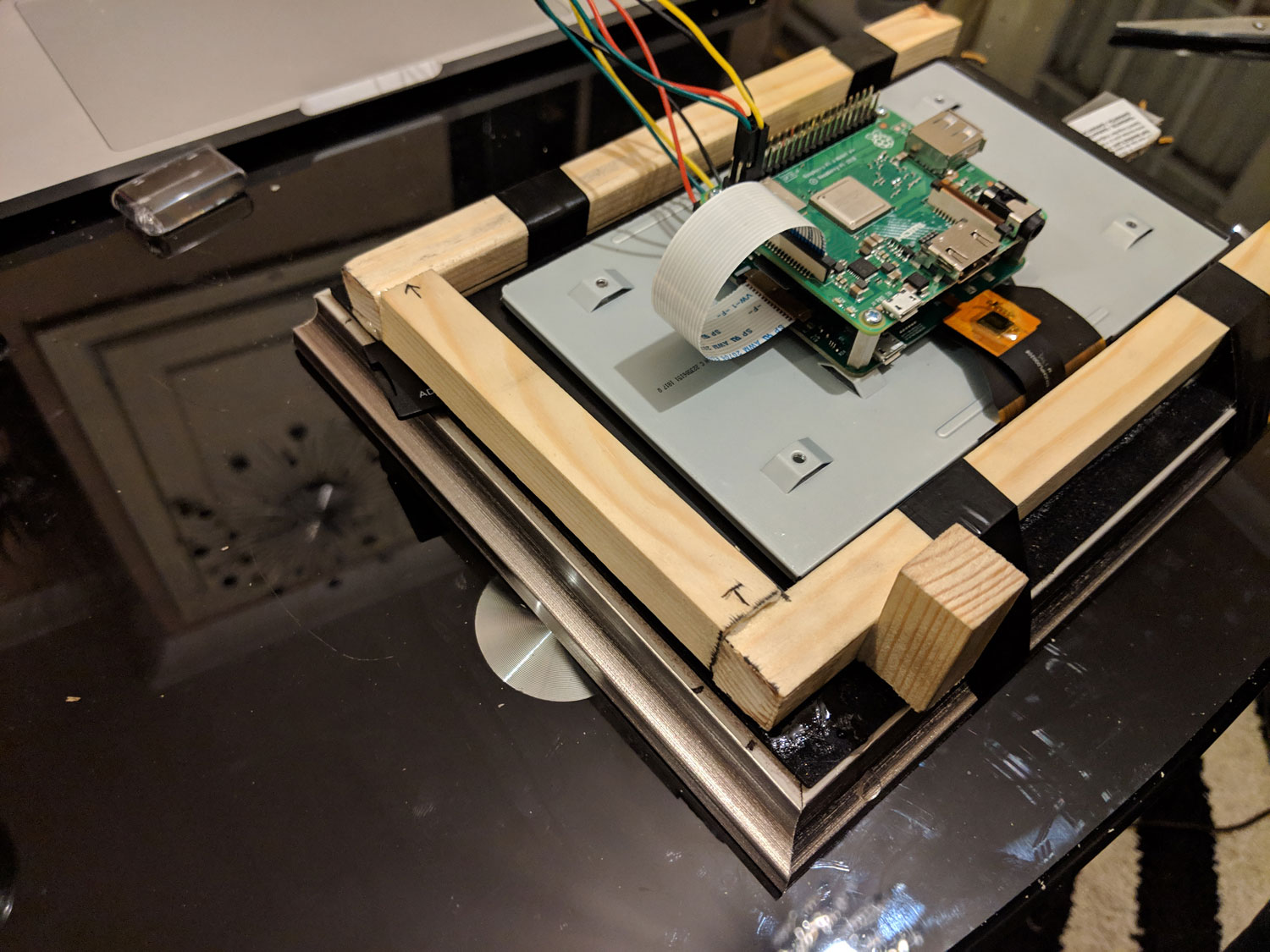

- A Raspberry Pi (Model A+) with a Raspberry Pi official touch screen to run the Electron app on.

- A traditional picture frame that fits in with my mum's decor, modified to contain a screen instead of a printed image.

So, let's work through all of those bits, what they did, and why I chose to do it this way.

Training Watson to find my Niblings

The very first thing I did before jumping into this project was check that I had enough images of my niece and nephew for Watson to be able to tell them apart from each other, and from other people too, with a reasonable confidence level.

This was a very simple process: I went through my phone photo albums, Facebook, and Instagram accounts to find as many photos of them as possible.

Now, Freddie and Grace are still very young but, to my continued astonishment, they've grown up and changed so much over the last few years. Whether I had enough data to detect them as they are now, as opposed to how they looked when they were babies, remained to be seen at this point. All in all, I collected roughly 40 pictures each of Grace and Freddie. This might not sound like very many, but Watson Visual Recognition is very good at building reasonably accurate classifiers from a very small dataset (so long as you don't have too many classes of similar things).

I wrote a few small Node.js scripts to create the custom classifiers and test them once they'd finished training. I made sure to add the `X-Watson-Learning-Opt-Out` header to each request to train the classifier. This keeps my training data from being persisted anywhere other than the instances that I control in the IBM Cloud. One caveat is that you can't retrain an existing model with new data; you have to create a new model each time. That's a trade-off I'm willing to make.

When I got my first results in, I was pleasantly surprised! I was getting consistent confidence scores of 0.7 - 0.8+ for both my Grace and Freddie classes! Success!

Not quite. Upon examining the results, I noticed that some of the images that had been classified as my niece and nephew were in fact my sister and her husband instead - y'know, the mother and father of my niece and nephew. Funnily enough, my niece and nephew look very much like their parents! So, with this in mind, I added a negative class to my dataset consisting of my sister, her husband, and part of the KDEF faces dataset to help Watson tell the difference between a human face, the faces of my niece and nephew, and the faces of my sister and brother-in-law.
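As a rough illustration, a training request with positive classes and a negative class could be assembled along these lines. This is a sketch rather than the scripts used for this project: `buildTrainingRequest`, the classifier name and the zip file names are all made up, and the `<class>_positive_examples` / `negative_examples` field names are how I understand the Visual Recognition v3 training endpoint to have named its form fields, so check them against the API reference before relying on them. The function only describes the request; it doesn't send anything.

```javascript
// Hypothetical helper: describes the multipart request used to train a
// custom classifier. It only builds a description; nothing is sent.
function buildTrainingRequest(name, positiveZips, negativeZip) {
  const fields = { name };

  // One "<class>_positive_examples" field per class, each pointing at a
  // zip file of example photos (field naming is an assumption, see above).
  for (const [className, zipPath] of Object.entries(positiveZips)) {
    fields[`${className}_positive_examples`] = zipPath;
  }

  // Photos of people who should NOT match any class (parents, other faces).
  if (negativeZip) {
    fields.negative_examples = negativeZip;
  }

  return {
    method: 'POST',
    // Ask the service not to keep the training data for its own use.
    headers: { 'X-Watson-Learning-Opt-Out': 'true' },
    fields,
  };
}

// Made-up names, purely for illustration.
const request = buildTrainingRequest(
  'niblings',
  { grace: 'grace.zip', freddie: 'freddie.zip' },
  'not-niblings.zip'
);
console.log(request.fields);
```

Keeping the negative examples in their own field means the parents and the generic faces never become a class of their own; they only teach the model what shouldn't match.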

After creating a new model and having it train again there were far fewer false positives (not perfect but good enough to get going). So I decided to start on building the other bits and bobs that I'd need.

At this point, I have a way of filtering images to find those that have Grace and Freddie in them. For each image I want to analyse from now on, all I have to do is post it up to Visual Recognition and ask it to classify with my custom classifier instead of the default classifier.

Now that I can classify images, it's time to get some images to classify!

Getting pictures out of Instagram

This was, by far, the hardest part of this project. We all know that social networks like their walled gardens, but Instagram has gone extra on this.

There is an API, but it's all but useless unless you're a business partner of Instagram, which I'm not. It's also got a deprecation notice, so it's not guaranteed to work for very long either, which is less than ideal.

I tried to automate logging in to Instagram's web UI and making requests on my behalf to get images periodically, but Instagram has the most overzealous automation detection I've ever come across. I spent a week trying various approaches to get at Instagram to take a look at the photos in my feed. It was impossible to do reliably.

So, Chrome extension it is. The nice thing about Chrome extensions is that they can operate on a page on my behalf. So, rather than having to worry about logging in and all that jam, whenever I use the Instagram Web UI (which is my preferred UI) it'll scrape the page for any images included in posts and then send up a list of their URLs to my first serverless function for processing.
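To give a feel for the scraping step, here's a minimal sketch of what a content script along these lines might do. It's not the extension's actual code: the `article img` selector, the `collectImageUrls` and `describeImage` names, and the way an ID and file name are derived from the URL (last path segment, minus its extension) are all assumptions for illustration.

```javascript
// Collects the distinct image URLs found under a root node.
// `root` is anything with querySelectorAll (the real `document` when this
// runs as a content script). The default selector is an assumption.
function collectImageUrls(root, selector = 'article img') {
  const urls = Array.from(root.querySelectorAll(selector))
    .map((img) => img.src)
    .filter((src) => typeof src === 'string' && src.startsWith('https://'));
  return [...new Set(urls)]; // drop duplicates
}

// Builds the entry sent up for one image: URL, ID and file name.
// Assumption: the last path segment is the file name and its stem is the ID.
function describeImage(url) {
  const fileName = new URL(url).pathname.split('/').pop();
  const id = fileName.replace(/\.[^.]+$/, '');
  return { url, id, fileName };
}

// In the extension this would run against the live page, for example:
//   const images = collectImageUrls(document).map(describeImage);
//   fetch(CHECKER_URL, { method: 'POST', body: JSON.stringify({ images }) });
// where CHECKER_URL is a placeholder for the checker function's endpoint.
```

Passing the root node in, rather than reaching for `document` directly, makes the scraping logic easy to try out away from the browser.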

Checking the images (The "checker" function)

So, once the Chrome extension has triggered my serverless function (the "checker") its job is to work through all of the URLs sent up to it and check whether or not the image at this URL has been processed before.

It does this by doing a HEAD request on my object storage instance (set up in IBM Cloud, but it could work with any S3-compatible storage service) to see if a file with the same ID as the UUID in each URL already exists.

If it does find a file, nothing happens because we've processed it before. If it doesn't find anything, it triggers the "getter" function to handle this image.
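The checker's decision logic can be sketched roughly as follows. This is an illustration under assumptions, not the deployed function: `headObject` and `triggerGetter` are hypothetical helpers passed in by the caller, standing in for the signed HEAD request against object storage and for invoking the next function.

```javascript
// Sketch of the "checker": for each image, ask storage whether an object
// with that ID already exists, and hand unseen images to the getter.
// `headObject(key)` (hypothetical) resolves to the HTTP status of a HEAD
// request: 200 if the object exists, 404 if it doesn't.
// `triggerGetter(image)` (hypothetical) invokes the next function.
async function checkImages(images, headObject, triggerGetter) {
  const triggered = [];
  for (const image of images) {
    const status = await headObject(image.id);
    if (status === 200) {
      continue; // already processed, nothing to do
    }
    if (status !== 404) {
      throw new Error(`Unexpected status ${status} for ${image.id}`);
    }
    await triggerGetter(image);
    triggered.push(image.id);
  }
  return triggered; // IDs that were passed on for processing
}
```

A HEAD request is a cheap way to ask "is it there?" because the storage service answers with headers only and never sends the image itself.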

Getting the images (The "getter" function)

So, once an image has been found that we've not yet checked for pictures of my niece and/or nephew (the and/or is important, I don't just want to find photos with both of them, I want to find photos of either one of them), we want to go and grab a copy of it that we can use for analysis.

This is simple enough. When the Chrome extension triggers the "checker" function, it passes up everything it needs to find the images afterwards too (the URL, UUID, and file name). When the getter function is triggered by the checker function, it takes the URL, goes and gets the image, and then stores it in an object storage bucket with the filename it was passed.
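In code, the getter might boil down to something like the sketch below. The helper names, the `originals` bucket name and the shape of `image` are assumptions; `putObject` stands in for whatever call the storage client provides, and `downloadWithFetch` relies on the `fetch` built into recent versions of Node.js.

```javascript
// Sketch of the "getter": download one image and store it under the file
// name it was given. `download(url)` and `putObject(bucket, key, data)` are
// hypothetical async helpers supplied by the caller.
async function getImage(image, download, putObject, bucket = 'originals') {
  const data = await download(image.url);
  if (!data || data.length === 0) {
    throw new Error(`Nothing downloaded from ${image.url}`);
  }
  await putObject(bucket, image.fileName, data);
  return { bucket, key: image.fileName, bytes: data.length };
}

// One possible `download`, using the global fetch in recent Node.js.
async function downloadWithFetch(url) {
  const response = await fetch(url);
  if (!response.ok) {
    throw new Error(`Download failed with status ${response.status}`);
  }
  return Buffer.from(await response.arrayBuffer());
}
```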

Finding the faces (The "face-extraction" function)

Once those images have been retrieved from Instagram, it's time to find the faces in them. We only want to extract the faces because that's what we're interested in classifying (more on that later). This is a very simple call to Watson Visual Recognition: instead of classifying an image, we're asking Watson to identify and return the bounds of the faces so that the function can extract them from the image.

Once the faces have been found (if there are any to be found) they're extracted from the main image, and then stored in a new bucket in my object storage. Following on from this, the "classifier" function is triggered for each face detected.
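Extracting a face comes down to turning each set of bounds into a crop rectangle. The sketch below shows one way that step could look, assuming each face arrives as `{ left, top, width, height }` in pixels; the function name and the clamping behaviour are my own illustration, and the actual cropping would be handed to an image library of your choice.

```javascript
// Turns face bounds into crop rectangles that stay inside the image.
// Each face is assumed to look like { left, top, width, height } in pixels.
function toCropRegions(faces, imageWidth, imageHeight) {
  return faces
    .map(({ left, top, width, height }) => {
      // Clamp every edge so the rectangle never leaves the image.
      const x = Math.max(0, Math.floor(left));
      const y = Math.max(0, Math.floor(top));
      const right = Math.min(imageWidth, Math.ceil(left + width));
      const bottom = Math.min(imageHeight, Math.ceil(top + height));
      return { left: x, top: y, width: right - x, height: bottom - y };
    })
    // Drop anything that ends up empty after clamping.
    .filter((region) => region.width > 0 && region.height > 0);
}

const regions = toCropRegions(
  [
    { left: 10, top: 20, width: 50, height: 60 },
    { left: 180, top: 90, width: 40, height: 40 }, // spills past the edge
  ],
  200,
  100
);
console.log(regions);
```

Clamping matters because detected bounds can run slightly past the edge of a photo, and most image libraries refuse to crop outside the picture.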

Checking who the faces belong to (The "classifier" function)

Each face that's extracted from an image is then run through the classifier function. This function takes the filename of each face, retrieves it from my object storage instance, and then sends it back to Watson Visual Recognition where it's evaluated by a custom model that I've trained on images of my niece and nephew.

If either my niece or nephew is found with a confidence score greater than 0.65 (1 being a perfect match, 0 being no match at all), the original image containing the faces is taken from the first object storage bucket and put into a new bucket for display.
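The threshold check itself is tiny. Here's a minimal sketch, assuming the classification result has already been boiled down to a list of `{ class, score }` pairs and that the two classes are named `grace` and `freddie` (both assumptions; the function names are made up too).

```javascript
const CONFIDENCE_THRESHOLD = 0.65;
const WANTED_CLASSES = new Set(['grace', 'freddie']); // assumed class names

// `classes` is assumed to look like [{ class: 'grace', score: 0.82 }, ...].
// True when either wanted class scores strictly above the threshold.
function matchesNibling(classes, threshold = CONFIDENCE_THRESHOLD) {
  return classes.some(
    (result) => WANTED_CLASSES.has(result.class) && result.score > threshold
  );
}

// An image is worth displaying if any one of its faces matches
// (the "and/or" case: either child is enough).
function shouldDisplay(faceResults) {
  return faceResults.some((classes) => matchesNibling(classes));
}
```

For example, `shouldDisplay([[{ class: 'freddie', score: 0.7 }]])` is `true`, while a face scoring exactly 0.65 is rejected because the comparison is strictly greater-than.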

Getting a list of images to display

Now we have a bucket of images that are only of my niece and nephew, but we need some way to know what the names of those images are, and then retrieve them for display.

This is where the "list-and-retrieve" serverless function comes in. When triggered by an HTTP request (a web action) it will do one of two things... it will either

- List the available files in the bucket with only my niece and nephew in them.

- Retrieve a file from the bucket and return it in the response of the HTTP request if a valid file name is passed with a query parameter.

With this function, we now have the means to find out what images are available, and get them from storage for display with ease.
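The two behaviours could be sketched like this. It assumes an OpenWhisk-style web action (a function that returns `statusCode`, `headers` and `body`, with binary bodies base64-encoded), which is my reading of "web action" here rather than something confirmed above. The `file` query parameter name, the `storage.list()` / `storage.get()` helpers and the JPEG content type are all hypothetical.

```javascript
const JSON_HEADERS = { 'Content-Type': 'application/json' };

// Sketch of the "list-and-retrieve" web action.
// `storage.list()` resolves to the file names in the display bucket and
// `storage.get(name)` resolves to a Buffer; both are hypothetical helpers.
function createListAndRetrieve(storage) {
  return async function listAndRetrieve(params) {
    const names = await storage.list();

    // No file requested: list what's available.
    if (!params.file) {
      return { statusCode: 200, headers: JSON_HEADERS, body: { files: names } };
    }

    // Only serve names that really exist in the bucket.
    if (!names.includes(params.file)) {
      return { statusCode: 404, headers: JSON_HEADERS, body: { error: 'No such file' } };
    }

    const data = await storage.get(params.file);
    return {
      statusCode: 200,
      headers: { 'Content-Type': 'image/jpeg' }, // assumes JPEG images
      body: data.toString('base64'), // binary responses go out base64-encoded
    };
  };
}
```

Checking the requested name against the bucket listing before fetching it is what makes "a valid file name" mean something: anything not in the list gets a 404 instead of a storage lookup.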

Displaying the photos (with an Electron app)

Now I have a way of getting the images, I need a way to display them. I could have built my mum an app for her Android phone, or a web page that she could browse to for viewing, but these are technologies that my mother is not too comfortable with, and the point of this project was to get the technology out of the way, not add more for my mother to deal with.

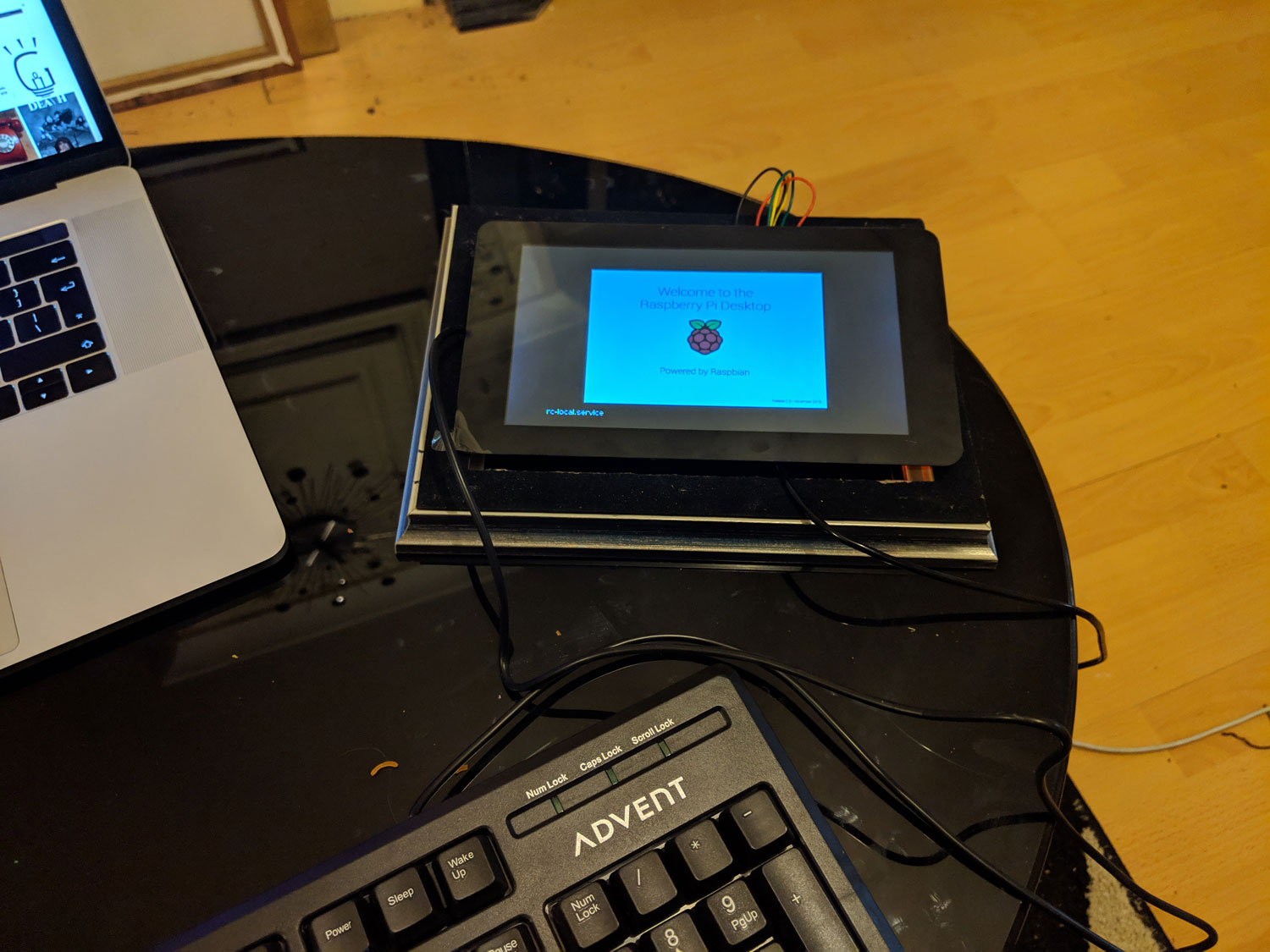

As someone who is very comfortable with web technologies, I decided to build an Electron app. Electron's documentation is extensive, it's well supported by the community, and an Electron app can be made to run full screen on startup on a Raspberry Pi (which is what I had decided to build the physical display around).

An Electron app is very simple. You provide a JavaScript file for handling interactions with the operating system (like rendering a window), an index.html file for displaying your content, and a package.json file which describes what each of these files is, and what dependencies (if any) are required.

The app is simple. On start, it calls my list-and-retrieve function and gets a list of all of the images of my niece and nephew that have been identified, and then gets each one from storage for display.

The images are displayed one at a time for 15 seconds each before it fades to another image. There's a button to lock the current image so that only it displays (in the instance where mum has a favourite photo), and there's a button which shows all of the images if my mum is looking for a particular photo.
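The rotation and lock behaviour can be modelled as a small piece of state, sketched below. This isn't the app's real code: `createSlideshow` and its method names are made up, and it assumes the images are shown in order and loop back to the start.

```javascript
const DISPLAY_MS = 15 * 1000; // each image stays up for 15 seconds

// Minimal slideshow state: which image is showing and whether it's locked.
function createSlideshow(images) {
  let index = 0;
  let locked = false;

  return {
    current: () => images[index],

    // The lock button: flips the lock and reports the new state.
    toggleLock() {
      locked = !locked;
      return locked;
    },

    // Called by the timer: moves to the next image unless locked.
    advance() {
      if (!locked && images.length > 0) {
        index = (index + 1) % images.length;
      }
      return images[index];
    },
  };
}

// In the renderer this might be driven by something like:
//   const slideshow = createSlideshow(imageUrls);
//   setInterval(() => render(slideshow.advance()), DISPLAY_MS);
// where `render` (hypothetical) swaps the image and handles the fade.
```

Keeping the timer running while locked, and simply ignoring its ticks, avoids having to stop and restart the interval every time the lock button is pressed.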

After I'd put all of that together, all that was left to do was to package up the Electron app for my Pi. I used electron-packager to do this. It's a great CLI tool which takes all of the pain out of compiling apps and dependencies for different platforms. You run the command with your target platform selected, and then you get a lovely application binary which you can copy to your desired system and run. Easy peasy!

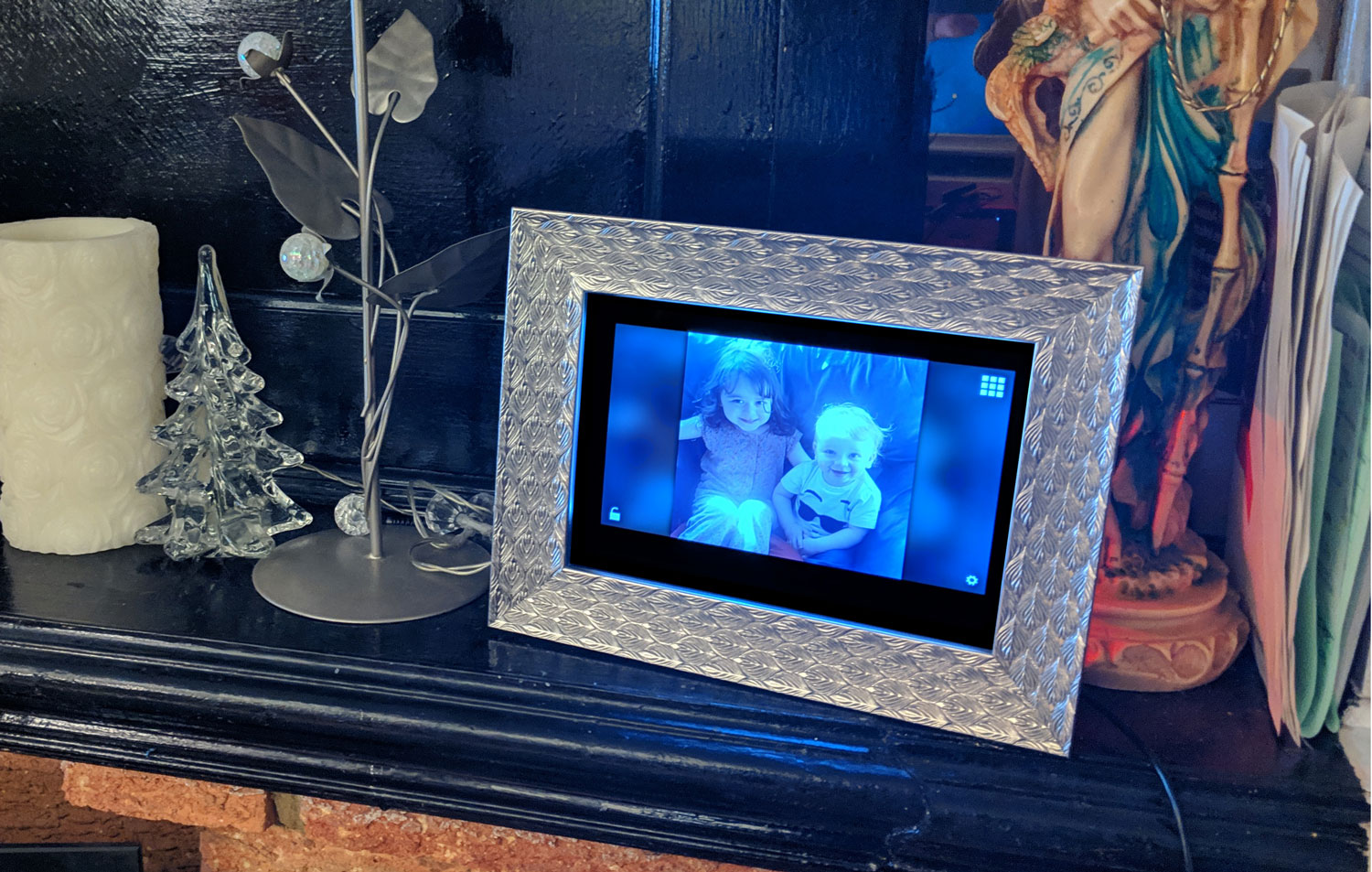

Pictured: A screenshot of the Electron app showing my niece and nephew being adorable in the snow.

The final form

That's all the software done - time to build a photo frame!

I told my mum what I intended to make for her, so we popped down to a local store in Luton to choose a picture frame that she'd like. We don't share a similar sense of aesthetics when it comes to homewares, so I figured it would be best that she picked something that she liked that I could adapt rather than forcing something of my sensibilities onto her living room.

Mum picked this lovely silver-coloured picture frame. The frame had to be big enough to accommodate the display that I'd chosen for my Pi to run the app on (an official Raspberry Pi display), but not so big that the image would be dwarfed by the frame itself. This was a little trickier than I had envisaged because, despite both the frame and the screen being very standard sizes and ratios for their form, the two form factors do not align. Eventually, we found something that could accommodate the screen, wouldn't look out of place in my mum's living room, and wasn't so big that it would look weird.

I didn't want to put the frame together in such a way that the screen would be permanently fixed to it. Too many things could go wrong in the construction, and debugging any issues that might arise in the future could be troublesome. So I opted to build a frame to hold the Pi on top of the picture frame itself.

Pictured: The construction steps of assembling the frame to hold a Pi and an official display screen.

This allows the Pi and its display to be slotted in and out as needed, without the whole thing falling apart while it's on display.

And that's pretty much it. Now the frame sits on my mum's mantelpiece and shows her pictures of her grandkids.