The Leap Motion Controller is officially released next week, and we've been working on ways to use it to make something cool and just a little bit different. As is usually the case, we drew inspiration for our latest bit of work from an unusual source – in this instance, Lionel Richie's music video for 'Hello'.

The video is brilliant, with Lionel pining over a blind girl just enough that it could be considered stalker-ish. But hilarity aside, if you get to the end of the video there's a bit where the blind girl, seemingly enamoured with Lionel's pursuit, has carved his face into a ball of clay. So that's what we decided we'd try to make with the Leap – a big ball of clay that you can mould a face out of.

So we had to work out how to go about this. The Leap Motion software also acts as a kind of server that sends out the current data it has recorded about your hands via WebSockets to anybody listening. What's nice about this is that practically any type of application can get at this data stream so you don't have to sit down and learn an entirely new language or work in an IDE that you don't like. I've dabbled in programming across quite a few different platforms and IDEs, but primarily I'm a web developer, which is why my first thought was, "Hey! WebGL is awesome, let's do it with that".
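To give a feel for how little is needed to tap into that stream, here is a minimal sketch. It assumes the local Leap service listens at `ws://localhost:6437` and sends JSON frames containing a `pointables` array whose entries have an `id` and a `tipPosition` of `[x, y, z]`; check both against the Leap documentation for your SDK version. `extractTips` and `listenToLeap` are hypothetical helper names, not part of any Leap library.

```javascript
// Hypothetical helper: pull fingertip positions out of one frame message.
// Assumes each frame is JSON with a `pointables` array of
// { id, tipPosition: [x, y, z] } entries; anything else yields [].
function extractTips(message) {
  const frame = JSON.parse(message);
  if (!Array.isArray(frame.pointables)) return [];
  return frame.pointables.map((p) => ({
    id: p.id,
    x: p.tipPosition[0],
    y: p.tipPosition[1],
    z: p.tipPosition[2],
  }));
}

// Browser-only wiring. The URL is an assumption about the local Leap service.
function listenToLeap(onTips, url = "ws://localhost:6437") {
  const socket = new WebSocket(url);
  socket.onmessage = (event) => onTips(extractTips(event.data));
  return socket;
}
```

Keeping the parsing separate from the socket means the same function can be fed recorded frames when no device is plugged in.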

WebGL isn't quite like any other browser-based API you've dealt with before. You can get far closer to the GPU than with any other API, and programming objects in 3D space is a far more involved process than most web devs would be used to. I'd used Unity and Maya in the past but that was quite some time ago, so I was pretty much going at this from scratch. Firstly, I Googled around for a place to start, and wound up on MDN's WebGL documentation. If you could describe my reaction using only three characters, it would be o_O. Parts of the code looked familiar; it was definitely JavaScript, but not JS as I know it.

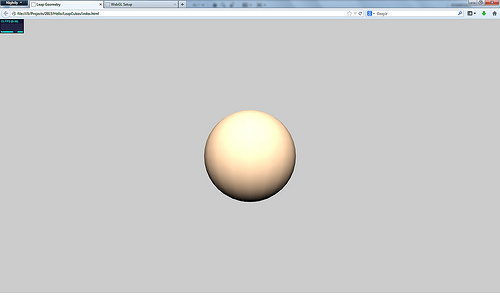

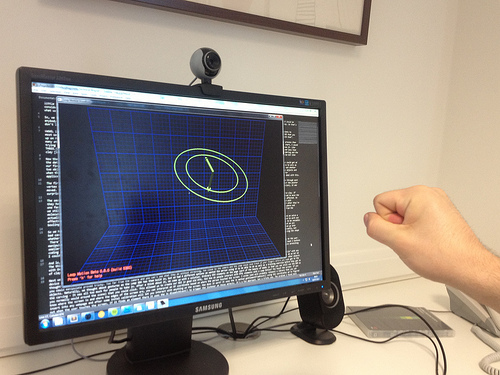

Like many others, I decided to use THREE.js by the fantastic Mr Doob et al. THREE.js abstracts the really complex parts of WebGL so that devs can spend more time building what they want and less time trying to figure out how to use things like shader languages. Initially I followed the book WebGL: Up and Running to get to grips with 3D rendering and the THREE.js library. The book talks you through creating objects, adding materials, adjusting lighting etc. Pretty quickly I was making objects...

...and then making them look just a little bit like clay.

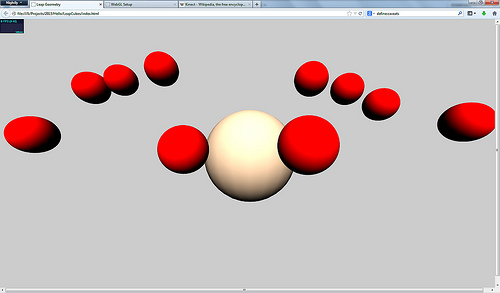

Now that we had an object to play with, we needed to work on a way to interact with it. That's where our lovely Leap Motion comes in. Using that WebSockets connection I mentioned earlier, we could get at the data describing where our fingers are in front of the screen. Ten cursors! Just what we need. In addition to having our ball of clay in the middle of the screen, I then created 10 little balls to act as our fingers in the WebGL world.

Whenever our Leap detected a pointer in space, we would then move the corresponding pointer in WebGL. Now here's where the tricky part starts. It's quite easy to work out whether two objects are colliding, particularly if they are regular-ish shapes (like cubes or spheres) and don't need to change very much. You simply measure the distance between the two centres of those objects and when that distance is less than the combined radius of the two objects, they are colliding. But what if you want to effect a change based on that collision? While the distance measuring is great for applications that involve platforms or walls (like Pong or Mario), you have no way of knowing where on the two objects those things collided, just that they did. I came up with two solutions to deal with this.
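The basic centre-to-centre test described above can be sketched like this. The object shape (`x`, `y`, `z`, `radius`) and the name `spheresCollide` are assumptions made for illustration.

```javascript
// Two spheres overlap when the distance between their centres is less than
// the sum of their radii. Comparing squared values avoids a square root.
function spheresCollide(a, b) {
  const dx = a.x - b.x;
  const dy = a.y - b.y;
  const dz = a.z - b.z;
  const reach = a.radius + b.radius;
  return dx * dx + dy * dy + dz * dz < reach * reach;
}
```

Note that the result is a plain yes or no, which is exactly the limitation: it says nothing about where on either surface the contact happened.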

The first solution was to check the distance between the pointer and the centre of the clay. If one or more pointers were close enough to the clay to manipulate the shape, I would iterate through each vertex of the clay and check the distance between it and the pointer. As the pointer approached the centre of the clay, I used some interpolation to work out where the point that we were affecting on the mesh of the clay should be. Interpolation is the process of working out where something should be at a given point in time or space between two or more sets of coordinates. You have point A from where the object started, a decimal value describing progress from point A to point B, and then point B.

I used the centre of the clay for point A, the distance of the pointer from the centre of the clay for the fraction value, and the original radius of the object as point B. This lets us collapse parts of the clay's mesh based on where the user has moved their hands. The result was quite severe (albeit pretty cool to look at), but after some tweaking of the calculation I managed to get a smoother denting effect.
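A rough sketch of that calculation, as I read the description above: the fraction is the pointer's distance from the centre divided by the original radius, clamped to the range 0 to 1, and the vertex is placed that far along the line from the centre to its original position. The function names and the plain `{x, y, z}` objects are assumptions, and the later smoothing tweaks are not included.

```javascript
// Linear interpolation: t = 0 gives a, t = 1 gives b.
function lerp(a, b, t) {
  return a + (b - a) * t;
}

// Pull one vertex towards the clay's centre.
// t = pointer distance from centre / original radius, clamped to [0, 1].
function dentVertex(centre, original, pointer, radius) {
  const d = Math.hypot(
    pointer.x - centre.x,
    pointer.y - centre.y,
    pointer.z - centre.z
  );
  const t = Math.min(1, Math.max(0, d / radius));
  return {
    x: lerp(centre.x, original.x, t),
    y: lerp(centre.y, original.y, t),
    z: lerp(centre.z, original.z, t),
  };
}
```

With the pointer outside the clay the fraction clamps to 1 and the vertex stays where it started; pushing the pointer halfway in pulls the vertex halfway to the centre.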

The second solution I came up with was much the same but used ray casting. The pointers would cast rays out from their centre and check to see if any of those rays had passed through a part of the clay. If they had, then you would know which face of the clay you had impacted upon, which meant that I wouldn't have to iterate through every vertex and work out where I had impacted on the object. If I only wanted to move the one face then this would have been perfect.
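For the curious, this is roughly what a ray-versus-face check involves under the hood. THREE.js provides its own ray casting, which is what you would normally use; the sketch below is a standalone version of the well-known Möller–Trumbore ray/triangle test, with points as `[x, y, z]` arrays and all function names made up for illustration.

```javascript
function sub(a, b) { return [a[0] - b[0], a[1] - b[1], a[2] - b[2]]; }
function dot(a, b) { return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]; }
function cross(a, b) {
  return [
    a[1] * b[2] - a[2] * b[1],
    a[2] * b[0] - a[0] * b[2],
    a[0] * b[1] - a[1] * b[0],
  ];
}

// Returns the distance along the ray to the triangle, or null on a miss.
function rayHitsTriangle(origin, dir, v0, v1, v2) {
  const EPS = 1e-9;
  const e1 = sub(v1, v0);
  const e2 = sub(v2, v0);
  const p = cross(dir, e2);
  const det = dot(e1, p);
  if (Math.abs(det) < EPS) return null; // ray is parallel to the face
  const inv = 1 / det;
  const s = sub(origin, v0);
  const u = dot(s, p) * inv;
  if (u < 0 || u > 1) return null;
  const q = cross(s, e1);
  const v = dot(dir, q) * inv;
  if (v < 0 || u + v > 1) return null;
  const t = dot(e2, q) * inv;
  return t > EPS ? t : null; // ignore hits behind the ray origin
}

// Finds the nearest face hit; each face is [v0, v1, v2].
function firstFaceHit(origin, dir, faces) {
  let best = null;
  faces.forEach((face, index) => {
    const distance = rayHitsTriangle(origin, dir, face[0], face[1], face[2]);
    if (distance !== null && (best === null || distance < best.distance)) {
      best = { index, distance };
    }
  });
  return best;
}
```

The payoff is the `index`: you learn which face was struck, which the centre-distance check cannot tell you.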

But clay doesn't just shift towards the position you've moved it: there's a certain amount of give and movement in the area around it that needs to be affected. So we still had to work out what part of the clay was next to the point that we impacted, and adjust accordingly. The thing is, shapes in WebGL aren't like shapes in the real world. They aren't constructed with molecules that sit next to each other, and spheres are made up of triangles (polygons) that are put together like an orange peel. So things that to you and me appear to be 'next to' each other are actually about 100 polygons behind in the array that determines the order in which those polygons are drawn.

Ultimately, this meant I was still checking the distance between points on the sphere and affecting them (ever decreasingly) based on their distance from the point of impact. If you want to imagine what the effect is like, try to picture a bowling ball on a trampoline. As the ball bounces further away from the trampoline surface, its effect is reduced. Ray casting turned out to be slightly faster than the first solution, but we liked the result of the first method, so we went with that.
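The "ever decreasing" part can be sketched as a falloff weight applied to each vertex. The linear falloff, the `influence` radius and the function names here are assumptions chosen for simplicity; the curve actually used in the project may well have been different.

```javascript
// Weight is 1 at the point of impact and fades linearly to 0 at `influence`.
function falloff(distance, influence) {
  return Math.max(0, 1 - distance / influence);
}

// Returns new vertex positions, each moved by `push` scaled by its weight.
// Vertices, impact and push are [x, y, z] arrays.
function dentAround(vertices, impact, push, influence) {
  return vertices.map((v) => {
    const d = Math.hypot(v[0] - impact[0], v[1] - impact[1], v[2] - impact[2]);
    const w = falloff(d, influence);
    return [v[0] + push[0] * w, v[1] + push[1] * w, v[2] + push[2] * w];
  });
}
```

Swapping `falloff` for a smoother curve (a cosine or smoothstep, say) changes how soft the edge of the dent looks without touching the rest.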

So we had an object we could mould based on input from the Leap Motion Controller – but the effect wasn't great at this point. The object was still quite angular due to a low polygon count. So how could we solve a bad case of "I ain't got enough polygons"? We ramped up the number of polygons of course. The problem with this is that the more polygons there are, the longer it takes to draw them and the longer it takes to work out whether or not we need to adjust them. Bottom line? Performance hit. Annoyingly, the level at which the effect looked anywhere near decent and smooth was the point at which the app became so slow as to be unusable.

There were a couple of things we could do to try and sort this out. Rather than increasing the polygon count across the entire mesh, we could increase the number of polygons at the particular point that we were manipulating. This is known as surface subdivision (a method partly developed by one of the chaps that founded Pixar, Edwin Catmull). Subdivision takes the polygons of a mesh and then splits them up into smaller polygons that cover the same area, thus making the object look smoother. I'll be honest, this was way above my head; I understand the theory and I could apply it to a very basic mesh manually, but coding something that handled this dynamically I just could not do. I took another approach instead. I figured that we never actually see – nor need – the back of the clay, so I removed it. By halving the shape I could double the density of the remaining half of the mesh. Nothing extra to count, just a smaller area to cover.

And so denting an object was dealt with for a bit. But what about stretching and extruding? This presented a challenge that ultimately I couldn't solve in a way that wasn't massively confusing to the user. The thing about gesture-based interaction is that it's really quite hard for a computer or developer to work out what the user's intentions are when they interact. Unlike a mouse, which has at least two buttons with on/off states that tell us exactly where and when a user wants to interact with something, we only had data telling us where hands were.

Naturally, the first thought when trying to replicate the stretching of clay is to try and make a pinch or grab gesture. But when you put two fingers together, Leap Motion loses tracking of those fingers, which I can understand – I guess it doesn't fit in with their model of what a hand looks like. (There might be something in the JS API that mitigates this but I couldn't find it – I'd love to hear if you have). That's the difficulty with a natural user interface (NUI) – we have to describe what a grab or a pinch is. On a touchscreen device this is quite simple because you're only dealing with finite points in 2D space. But in 3D space and with a camera, the gesture can wildly differ from grab to grab and person to person, and so far it's not been an easy process to get machines to understand this.

Don't get me wrong, gestures are a great, fun way of interacting with computers – but only, in my opinion, when it's quite a pronounced gesture that has a global usage, like swiping between views in an app, or skipping a track, rather than for delicate manipulations.

For now, gestures for stretching were out, so we thought, "Why don't we have it so that when the hands are inside the clay it can expand?" Another tricky proposition because we're then trying to guess what the user's intention is. Which way should the clay expand when the user's hands are inside the ball? Outwards from the centre? Away from the user's fingers? How, then, does the user take their hands out of the clay when it's constantly moving away from them? Does the user have to move their hands at a certain speed to break out of the clay, or cease interaction altogether in order to start pushing the clay again? See, that's the beauty of a mouse – with the buttons you know when the user wants an interaction to occur. That's why, despite all of the touchscreen goodness we have these days, the mouse (or at least a trackpad) is still king. We could, of course, have had a switch that triggered the moulding/extruding 'modes'. But again, we didn't want people to be interacting with the computer, we wanted them to focus solely on the clay. I think it's going to take a smarter person than me to figure this one out.

We wanted a guide for people to use for their faces whilst moulding, so being in a browser I opted to use getUserMedia. getUserMedia is really cool but that's not what I'm going to talk about here. What was interesting was how we decided to use the grab. Turns out you can map video to WebGL textures (which is fully awesome), so we played with that effect for a bit. As you warp the clay, you get a kind of magic mirror effect; it looks cool and is a ton of fun.

However, the effect distracts from the moulding. We decided instead to use Canvas to adjust the color contrasts of our image (we got that for free in WebGL) and then use it as a mask over the object like this.
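As a rough idea of the Canvas step, here is a sketch of a contrast adjustment over raw RGBA pixel data, the kind you get back from a 2D canvas context's `getImageData()`. The formula (scale each channel around mid-grey, 128) and the name `adjustContrast` are assumptions; it is one common way to do it, not necessarily the one used in 'Hello'.

```javascript
// Scales each colour channel around mid-grey (128), leaving alpha untouched.
// `data` is a Uint8ClampedArray of RGBA bytes, so out-of-range results are
// clamped to 0..255 automatically. factor > 1 increases contrast.
function adjustContrast(data, factor) {
  for (let i = 0; i < data.length; i += 4) {
    data[i] = (data[i] - 128) * factor + 128;         // red
    data[i + 1] = (data[i + 1] - 128) * factor + 128; // green
    data[i + 2] = (data[i + 2] - 128) * factor + 128; // blue
  }
  return data;
}

// Browser usage, assuming `ctx` is a 2D context with the video frame drawn:
//   const image = ctx.getImageData(0, 0, width, height);
//   adjustContrast(image.data, 1.5);
//   ctx.putImageData(image, 0, 0);
```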

We then had to figure out how people were going to interact with the parts of the program that weren't to do with moulding. We decided early on that we didn't want people to interact with 'Hello' with any device other than Leap Motion. We felt it would distract from the process and miss the point of trying to get people to think of computers as a little more than just point-and-click boxes.

So what could we do? We thought about putting elements inside the 3D scene that people would be able to tap or knock to initiate an action. But placing these objects so that they looked like part of a GUI and not just other objects you could manipulate proved tricky. Too far from the camera and you can't see what they do without straining, too close to the camera and Leap Motion could lose track of your fingers before you even get to the objects. Naturally, we thought about gestures as the Leap SDK has them built in. But there were four different options we wanted to be available to people using 'Hello', which we could also use for a training exercise before they got to the moulding. It was all too much for people to take in.

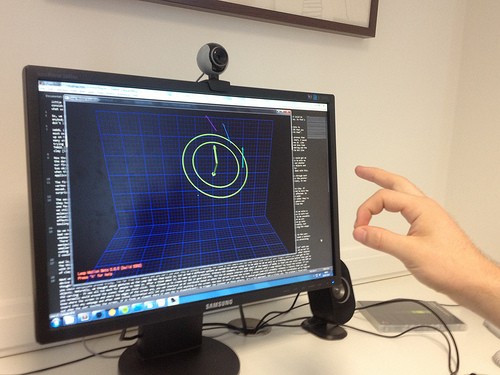

At one point, we even used webkitSpeechRecognition, and while this was fun, we all know how finicky voice recognition can be. So we had a think about it and came up with a solution: ordinary DIV elements on the page that you could click on (part of the beauty of WebGL is that you can still use the DOM as you normally would around the scene). This allowed us to create our GUI in good old HTML. But how do people interact with HTML elements with Leap Motion? You touch the screen. If you know where the screen is and where the edges of the screen are, you can interpret an XY coordinate based on where the person touched the screen.
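The mapping itself boils down to a bit of proportion. In this sketch the calibration object (`left`, `right`, `top`, `bottom`, `planeZ`, all in Leap coordinates) and the function name are hypothetical, and it assumes the Leap's y axis points up while its z axis points towards the user, so the screen sits at a smaller z than the hand.

```javascript
// Converts a fingertip position in Leap space to pixel coordinates.
// `cal` holds the Leap-space positions of the screen edges found during
// calibration, plus the z value of the screen plane (all hypothetical names).
function toScreen(tip, cal, screen) {
  const x = ((tip.x - cal.left) / (cal.right - cal.left)) * screen.width;
  // Leap y grows upwards, pixel y grows downwards, so measure from the top.
  const y = ((cal.top - tip.y) / (cal.top - cal.bottom)) * screen.height;
  // Assumes z decreases towards the screen.
  const touching = tip.z <= cal.planeZ;
  return { x, y, touching };
}
```

A straight linear mapping like this ignores any perspective distortion from the sensor, so expect it to drift towards the edges.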

I wrote (and will probably release) a library that you can insert into any webpage and after some initial calibration will let you interact with anything on the screen. How does it work? It uses the JS method document.elementFromPoint(). Based on the coordinates we figured out, when the user touches the screen where there is a corresponding element, dispatchEvent() triggers the listeners on that element. It's a fun approach, but a tricky one. The problem I found most frustrating was that sometimes Leap Motion would lose the tracking of the fingers as they approached the screen – I believe this is because of the reflection of the finger on the screen confusing the sensor. I adjusted the position of the Leap to try and compensate but the effects were only slightly mitigated. Oh well, can't win them all.
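The core of that idea fits in a few lines. This is only a sketch, not the library itself: it uses the standard `document.elementFromPoint()` and `dispatchEvent()`, takes the document as a parameter so it can be tried out with a stand-in, and `tapAt` is a made-up name.

```javascript
// Finds whatever element sits under the given viewport coordinates and
// fires a synthetic click at it. Returns the element, or null if none.
// A plain Event is used here; in a browser a MouseEvent carrying clientX and
// clientY would be closer to a real click.
function tapAt(doc, x, y) {
  const target = doc.elementFromPoint(x, y);
  if (!target) return null;
  target.dispatchEvent(new Event("click", { bubbles: true, cancelable: true }));
  return target;
}

// Browser usage: tapAt(document, 320, 240);
```

Because the event bubbles, existing click listeners higher up the tree still fire, which is what lets this sit on top of a page that knows nothing about the Leap.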

Our main use for the touchscreen business was an onscreen keyboard that people would use to name their work at the end of the process. It works pretty well once you've had time to adjust to it – Redwebbers we tested it on kept treating the screen like it was as sensitive as an iPad, but it's not. It's me trying to make an educated guess at where/when you're touching the screen, and takes a little time for the computer to work out what you've done, so can sometimes miss a key press. There's also the interesting quirk that the further you move from the device, the more exaggerated the coordinates become. I think this is because Leap Motion is still essentially a camera. It has a viewing frustum just like the camera in our scene, and as you move further along a 2D plane, the perspective warps the coordinates somehow – just a guess, I don't understand enough about optics to explain this further. I think I could reduce the effect by adjusting how I work out coordinates based on the screen size, but I'll need to do some further reading first.

Anyway, that's a brief overview of the stuff I've had to figure out over the last little while. If WebGL or Leap Motion is something you want to get involved in then just give me a shout. Both of the technologies are fantastic and I'd love to share what I've learned in detail – there's so much more to cover! I never appreciated before how difficult 3D graphics could be; there's so much that needs to be considered when constructing a scene and affecting objects – even without user interaction. I can't wait to see the kind of stuff that comes out with the Leap Dev store next week. Exciting times!