Almost a year ago now, a couple of colleagues and I had the great privilege of being invited to dinner at Gonville & Caius college in Cambridge.

Pictured: Me in the Gonville and Caius dining room.

Pictured: Me in the Gonville and Caius dining room.

While there, I was seated near my colleague Ross Cruickshank and we started talking about low-powered long range radio and how the arrival of pervasive 5G networks may replace some of the use-cases for such kinds of radios (part of our day jobs is being IoT nerds).

How fast is fast enough?

When talking about 5G networks, one invariably arrives at the topic of the promised network speeds. According to the 5G Wikipedia article, mobile data speeds could reach up to 1.4Gbit/s.

Now, that's very fast when compared with the current 4G networks which can boast speeds of anywhere between 8 - 50 MB/s in ideal circumstances.

But, here's where things get interesting. How fast is the read / write speed for your on-board hard drive? We're very lucky to live in an age where increases in speed and capacity come in leaps and bounds in personal computing. I remember getting my first flash drive back in 2003. It cost £50, and stored 128mb of data - and it was USB!

NOTHING CAN TOP THE TECHNOLOGY OF 2003.

5G vs. your hard drive

Let’s look at something more modern…

The hard drive in my laptop (a 2017 15" Macbook Pro) is the APPLE SSD SM0512L. I think it's fair to say that Macbooks like this are still very much the workhorse for most Node.js and JavaScript developers, so I think it's fair to examine its capabilities. According to userbenchmark.com, this hard drive can write large files at an average speed of 477MB/s

Let's compare those two numbers again. 1.4Gbit/s vs 477MB/s.

(Just to be clear, I am aware that bits and bytes are different from one another. If we convert the megabits per seconds into a write speed, it’s around 160MB/s. So, far slower than my onboard drive, but also, potentially infinite…)

If 5G networks do become as pervasive and reliable as we might envisage, one could start to imagine a world where all but the bare minimum of storage is completely offloaded onto the cloud platforms.

Now, I'm one of those guys that when an interesting question is asked, or a challenge presented, I start to get an itch. I have to look at how something might be made. I'm also very lucky to have a job that lets me tinker across pretty much any area of tech that I like. So I started thinking... is it possible to do this today?

Pictured: Me pondering...

Pictured: Me pondering...

The Internet today...

Now, even if you have "super fast" internet in your home and/or office, it's probably not going to be as fast as 5G promises to be, and probably not even as fast as the read/write speed of your hard drive is now. This is why we still carry giant capacity hard drives in our laptops, right?

To give you a sense of what I have to work with... the down speed of the connection in the WeWork my team is based in is 28Mbp/s. In the Costa Coffee that I like to frequent near my house, it's around 4Mbp/s. OK, not great, we're going to have to learn patience, but as we all know, this will get better with time. We can still write the software that replaces our hard drives with object storage, and then we just have to sit back and wait for it to get better. Remember, streaming films online might have seemed insane in 2005 when Netflix started offering a streaming service and average UK connection speeds were 2Mbp/s, but look where we are today.

What exists now?

So, I started looking around at current examples of online storage that have a home on personal computing OSs.

Dropbox is a favourite storage service for a lot of people, and I think it was really the first company to capture the potential of cloud storage for the masses. Dropbox offers a client for Linux, Windows, and MacOS. It creates a folder on your OS which the application watches and then syncs to your Dropbox when it notices a change in the files and folders in there. This felt like a cheat to me, sure it's a good solution, but it doesn't interact with the OS, it watches and when the OS makes a change, it takes an action to reflect that elsewhere.

So I looked around for alternatives...

Pictured: Me, looking for alternatives.

Pictured: Me, looking for alternatives.

How do you mount a virtual drive that you can hook into the native events of the operating system and offload them to the cloud instead of a physical medium? Well, normally, you'd write a software driver which hooks into the system and lets you take control of actions. Y'know, just a little dabbling around of kernel code and you're good to go.

I don't know about you, but I've never come close to writing a device driver, the kernel is something that other people deal with. I'm a Node.js developer - I live in userspace.

So, end of story, right? Nope! While stumbling around the Google I came across FUSE, which stands for Filesytem in USErspace. This is a piece of software which feels very much at home in *nix systems, and amongst other things enables developers to mount a virtual volume in userspace that software applications can hook into!

Pictured: A screenshot of the FUSE for macOS site.

Pictured: A screenshot of the FUSE for macOS site.

Excellent! Just what I needed. By chance, it turned out I already had FUSE installed on my Macbook as I'd needed to mount an EXT4 Filesystem (popular with Debian-based Linux distributions) and FUSE enabled this too.

Let's build it!

So, it's possible to do - I just need to be able to do it in a language that I know...

- C? - lol, no.

- Go? - Possible, but I'm still learning

- Python? - Good support, could have done it with Python, hate the module ecosystem.

- JavaScript? - I love npm, and JavaScript is by far the language I'm most comfortable with.

JavaScript it is!

So, let's look around and see if there's a module I can install that will help me get on my way without having to do too much reading.

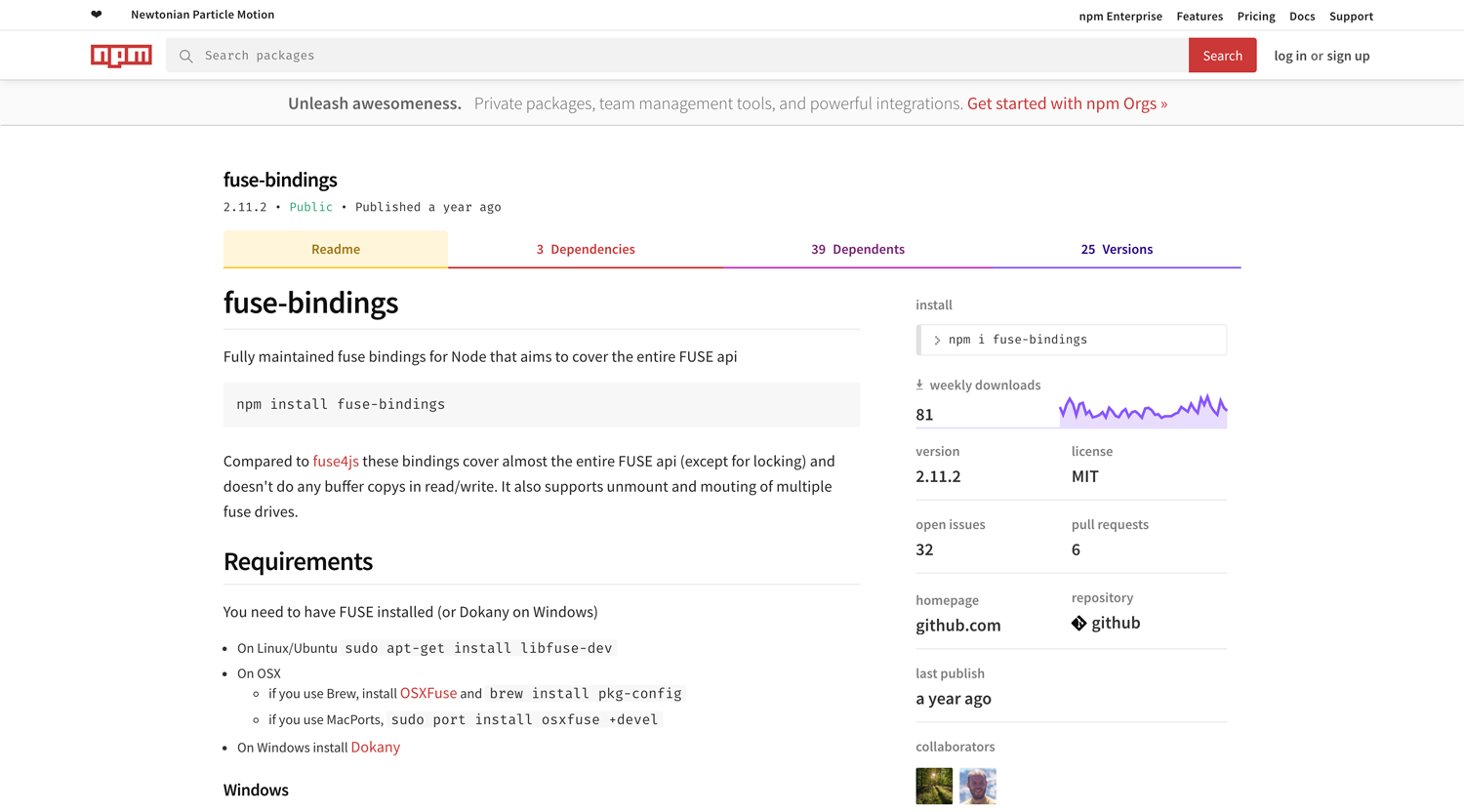

After a quick search on the Googles, I came across "fuse-bindings". There are a bunch of efforts on npm at wrapping Fuse bindings in Node.js code that we can interface with, but this module had the most complete feature set I came across.

Pictured: The fuse-bindings npm page

Pictured: The fuse-bindings npm page

The code

So, how does it work? The setup is pretty simple. To mount a Fuse volume, you simply pass a directory which (as far as I can tell) works as a proxy for the fuse bindings to hook into.

const fuse = require('fuse-bindings');

const mountPath = process.platform !== 'win32' ? './mnt' : 'M:\\'

fuse.mount(mountPath, {});

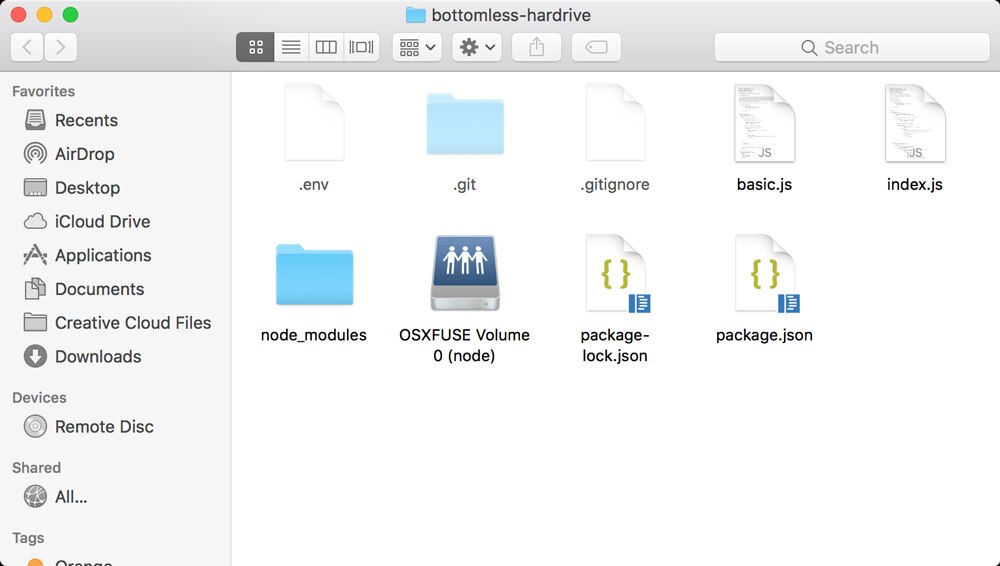

And you'll get something like this:

Cracking! We've got a volume that we can play with. Let's shoot back to that code snippet.

fuse.mount(mountPath, {});

The second argument here is an object that we can pass through a bunch of functions that will be executed when your operating system tries to do a certain action like a read or write.

It looks a little bit like this:

fuse.mount(mountPath, {

readdir: (path, cb) => {},

getattr: (path, cb) => {},

open: (path, flags, cb) => {},

read: (path, fd, buf, len, pos, cb) => {}

})

Those are the minimum functions you need to spoof a read-only filesystem on macOS. As you can see, the object is just a list of functions, so in a way this feels much more like writing a C++ program to me than writing JavaScript - and I can see why that's happened, Fuse is, at it's core, C++. I think this interface was kept so that C++ developers would feel a little more at home, but personally, this doesn't feel right to me.

OK, so let's work through proxying the read side of a Fuse volume up to a cloud platform.

First up: readdir.

So, this function is called whenever an OS tries to get a list of items in a directory. All it wants returned is an array of paths that relate to files and directories. Okie dokie, easy enough, we can just use `listObjects` to get that.

// readdir function

(path, cb) => {

if (path === '/') {

new Promise( (resolve, reject) => {

const pathPrefix = path === '/' ? '' : path;

var params = {

Bucket: process.env.BUCKET_NAME,

Prefix : pathPrefix

};

console.log('readdir params:', params);

S3.listObjects(params, (err, data) => {

if (err) {

console.log('listObjects err', err, params);

reject();

} else {

const files = data.Contents.map(obj => { return `${obj.Key}` } );

resolve(files);

};

});

})

.then(files => {

cb(0, files);

});

} else {

cb(0);

}

}

Cool, that's that out of the way. Next up getattr.

So, this function is called when the OS wants to get details about a file or directory. So, read/write permissions, dates created/modified etc. So, that's a pretty easy choice to replace, we can use `headObject` to get most of those details:

// readdir function

(path, cb) => {

if (path === '/') {

cb(0, {

mtime: new Date(),

atime: new Date(),

ctime: new Date(),

nlink: 1,

size: 100,

mode: 16877,

uid: process.getuid ? process.getuid() : 0,

gid: process.getgid ? process.getgid() : 0

})

} else {

S3.headObject({

Bucket: process.env.BUCKET_NAME,

Key : adjustPath(path)

}, function(err, data) {

if(err){

cb(0, {

mtime: new Date(),

atime: new Date(),

ctime: new Date(),

nlink: 1,

size: 0,

mode: 33188,

uid: process.getuid ? process.getuid() : 0,

gid: process.getgid ? process.getgid() : 0

});

} else {

cb(0, {

mtime: new Date(),

atime: new Date(),

ctime: new Date(),

nlink: 1,

size: data.ContentLength,

mode: 33188,

uid: process.getuid ? process.getuid() : 0,

gid: process.getgid ? process.getgid() : 0

})

}

});

}

}

Next up open:

This is the simplest of the methods called. It's called when the OS tries to open a file. It gets passed a path and expects a file descriptor, which we can give it with the following:

// open function

function (path, flags, cb) {

cb(0, 42);

}

Easy-peasy-lemon-squeezy.

And we're 3/4 of the way to creating a bottomless (admittedly read-only at this point) hard drive that uses object storage. Neat, huh?

Last function we need is perhaps the most important, it's the read function. Any guesses as to what this does?

// read functions

(path, fd, buf, len, pos, cb) => {

const params = {

Bucket: process.env.BUCKET_NAME,

Key: adjustPath(path),

Range : `bytes=${pos}-${pos + len}`

};

S3.getObject(params, (err, data) => {

if(err){

return cb(0);

} else {

data.Body.copy(buf, 0, 0, data.Body.length);

return cb(buf.length);

}

});

}

And that's that!

Take aways

- This is hard

- Because of point 1, there's little documentation

- The OS does not like being f***ed with.

I've spent countless hours tinkering and prodding to get this to work. It's not surprising that this sort of thing isn't commonplace yet, but I think it will be.

I'm not an expert at file system operations. I ask the system to write me a file, and it writes me a file. This is a way lower level than I've ever really played with before.

No file descriptor? - "LOCK IT DOWN, LOCK IT ALL DOWN. MORTAL, YOU DON'T KNOW WHAT YOU'RE DOING AND IT'S TIME FOR YOU TO RESTART AGAIN" (that's your computer chastising you, btw). It was quicker to reboot my mac than it was for the OS to give me back my file explorer, and the FUSE driver doesn't like to vocal about what you've done wrong

Next steps

With all of that, I've got the basic read side of things out of the way. I'd like to flesh out some of the more obscure read operations that are mentioned in passing in the module's docs. Following that, getting some sort of write functionality up and running would be neat too. You can be sure I'll be updating readers as and when that happens.