This post has been migrated from its original home to here. It originally lived at this location.

"In computing, ambient intelligence (AmI) refers to electronic environments that are sensitive and responsive to the presence of people". Thanks Wikipedia, couldn't have said it better myself.

Ambient computing is all around us and it comes in many forms. One form you may be familiar with is the location-based services on your mobile phone.

Google Now, for example, takes where you are, your most recent searches, your personal habits (places you've eaten, amount spent etc.) and will recommend restaurants that you might like to go to after the cinema. (Which it knows you bought a ticket for because you got a receipt in the email, and besides, it's Wednesday! It's movie night!)

You never directly input any of this information; an algorithm observes you and your tastes and tries to help you out before you even realise you want help in the first place.

This form of ambient computing is known as "anticipatory" and it's just one way that AmI has made it into our lives without us even realising – after all, that's the point.

The best way to learn more about a topic is to dive straight in and make something, so we made Ambient Lionel.

Ambient Lionel's life is a simple one. He listens to the world around him and if he hears the word "Hello", he's naturally going to assume that you're talking to him. He'll think about it for a second or two and then answer you with his famous line: "Is it me you're looking for?"

How does it work?

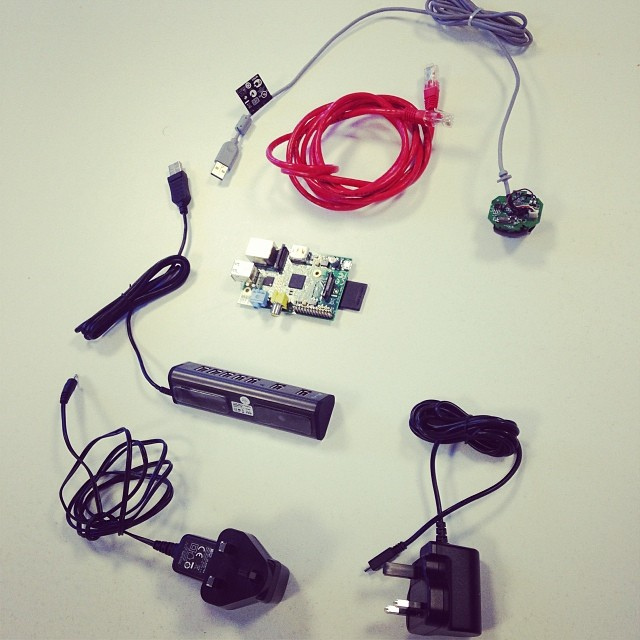

We started with one of our Raspberry Pis which, given how small they are, and the fair amount of computing power they have, seemed to be the perfect fit for this project.

Initially, we wanted to try and run the speech recognition on the Raspberry Pi itself, so we spent about a week setting up and coding for CMU Sphinx. CMU Sphinx is an open source library for handling speech recognition; Apple and Google run software similar to (but likely not the same as) Sphinx in order to deliver their voice recognition services. Even though we were only looking for one word amongst many, the time it took for the Pi to single out and process what had been said was far too long for it to be of any use (~20-30 seconds for a match). To try and shorten this we overclocked the Pi to run constantly at its 1GHz maximum, and even that only shaved off five seconds.

Ultimately, we had to get the voice recognition done on a more powerful machine. We thought about running the Sphinx software remotely and feeding back the information to our Pi, but the set-up was far too tricky and had the potential to get expensive.

Eventually, we stumbled across an undocumented Google Voice API that transcribes audio files that are sent to it and returns the text to a program. Great! Just what we were looking for. We wrote a script that constantly takes two-second audio grabs of the sounds that it can hear, sends them off for transcribing, then, if the word "Hello" is found, plays our audio track.
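To give a feel for the shape of that loop, here is a minimal sketch in Python. It is not the code from our repository. The function names, file paths and the use of ALSA's `arecord` and `aplay` for recording and playback are all assumptions made for illustration. Because the transcription API is undocumented, the sketch does not call it at all: `transcribe` is a hypothetical function you pass in, which takes a path to an audio file and returns the text it heard.

```python
import re
import subprocess
from typing import Callable, Iterable, Iterator

CLIP_SECONDS = 2        # clip length described in the post
TRIGGER_WORD = "hello"  # the word Lionel listens for


def contains_trigger(transcript: str, trigger: str = TRIGGER_WORD) -> bool:
    """Return True if the trigger appears as a whole word, ignoring case."""
    words = re.findall(r"[a-z']+", transcript.lower())
    return trigger.lower() in words


def record_clip(path: str = "/tmp/clip.wav", seconds: int = CLIP_SECONDS) -> str:
    """Record a short clip with arecord (assumes ALSA tools are installed)."""
    subprocess.run(
        ["arecord", "-q", "-d", str(seconds), "-f", "cd", path], check=True
    )
    return path


def play_reply(path: str = "reply.wav") -> None:
    """Play the reply track with aplay (the file name is hypothetical)."""
    subprocess.run(["aplay", "-q", path], check=True)


def endless_clips() -> Iterator[str]:
    """Yield freshly recorded clips forever."""
    while True:
        yield record_clip()


def listen(
    clips: Iterable[str],
    transcribe: Callable[[str], str],
    respond: Callable[[], None],
) -> int:
    """Transcribe each clip and respond whenever the trigger word is heard.

    Returns the number of times a response was triggered.
    """
    replies = 0
    for clip in clips:
        if contains_trigger(transcribe(clip)):
            respond()
            replies += 1
    return replies
```

On a Pi this would be started with something like `listen(endless_clips(), transcribe=my_transcriber, respond=play_reply)`, where `my_transcriber` stands in for whichever speech-to-text service you use. Passing the transcriber and the response in as arguments also means the matching logic can be tried out with canned text, without a microphone or a network connection.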

Say you, say me?

It works fairly well for my voice, although like any new technology it's not perfect when it comes to reliability or accuracy. We estimate it works about 80% of the time. When you think about the variety of voices, intonations and accents you can say "Hello" in, and then the different levels of background noise that have to be filtered out, that's pretty good.

It may not be failsafe yet, but a small unobtrusive box that's constantly listening for calls for help could be very useful in my gran's flat in case she falls again. However, I'm sure she'd much rather have a full-size (and more lifelike) version of Lionel to stare and gaze at 'all night long' instead.

Our Python code for Ambient Lionel is on GitHub.

And you can see more of our innovation projects on our Labs site.